Rice University engineers are building a flat microscope, called FlatScope, and developing software that can decode and trigger neurons on the surface of the brain.

Their goal as part of a new government initiative is to provide an alternative path for sight and sound to be delivered directly to the brain.

The project is part of a $65 million effort announced this week by the federal Defense Advanced Research Projects Agency (DARPA) to develop a high-resolution neural interface. Among many long-term goals, the Neural Engineering System Design (NESD) program hopes to compensate for a person's loss of vision or hearing by delivering digital information directly to parts of the brain that can process it.

Members of Rice's Electrical and Computer Engineering Department will focus first on vision. They will receive $4 million over four years to develop an optical hardware and software interface. The optical interface will detect signals from modified neurons that generate light when they are active. The project is a collaboration with the Yale University-affiliated John B. Pierce Laboratory led by neuroscientist Vincent Pieribone.

Current probes that monitor and deliver signals to neurons -- for instance, to treat Parkinson's disease or epilepsy -- are extremely limited, according to the Rice team. "State-of-the-art systems have only 16 electrodes, and that creates a real practical limit on how well we can capture and represent information from the brain," Rice engineer Jacob Robinson said.

Robinson and Rice colleagues Richard Baraniuk, Ashok Veeraraghavan and Caleb Kemere are charged with developing a thin interface that can monitor and stimulate hundreds of thousands and perhaps millions of neurons in the cortex, the outermost layer of the brain.

"The inspiration comes from advances in semiconductor manufacturing," Robinson said. "We're able to create extremely dense processors with billions of elements on a chip for the phone in your pocket. So why not apply these advances to neural interfaces?"

Kemere said some teams participating in the multi-institution project are investigating devices with thousands of electrodes to address individual neurons. "We're taking an all-optical approach where the microscope might be able to visualize a million neurons," he said.

That requires neurons to be visible. Pieribone's Pierce Lab is gathering expertise in bioluminescence -- think fireflies and glowing jellyfish -- with the goal of programming neurons with proteins that release a photon when triggered. "The idea of manipulating cells to create light when there's an electrical impulse is not extremely far-fetched in the sense that we are already using fluorescence to measure electrical activity," Robinson said.

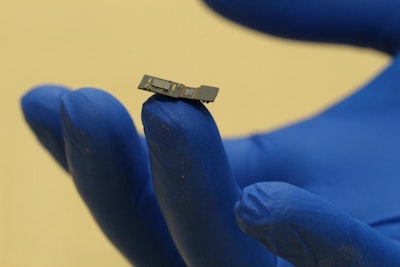

The scope under development is a cousin to Rice's FlatCam, developed by Baraniuk and Veeraraghavan to eliminate the need for bulky lenses in cameras. The new project would make FlatCam even flatter, small enough to sit between the skull and cortex without putting additional pressure on the brain, and with enough capacity to sense and deliver signals from perhaps millions of neurons to a computer.

Alongside the hardware, Rice is modifying FlatCam algorithms to handle data from the brain interface.

"The microscope we're building captures three-dimensional images, so we'll be able to see not only the surface but also to a certain depth below," Veeraraghavan said. "At the moment we don't know the limit, but we hope we can see 500 microns deep in tissue."

"That should get us to the dense layers of cortex where we think most of the computations are actually happening, where the neurons connect to each other," Kemere said.

A team at Columbia University is tackling another major challenge: the ability to wirelessly power and gather data from the interface.

In its announcement, DARPA described its goals for the implantable package. "Part of the fundamental research challenge will be developing a deep understanding of how the brain processes hearing, speech and vision simultaneously with individual neuron-level precision and at a scale sufficient to represent detailed imagery and sound," according to the agency. "The selected teams will apply insights into those biological processes to the development of strategies for interpreting neuronal activity quickly and with minimal power and computational resources."

"It's amazing," Kemere said. "Our team is working on three crazy challenges, and each one of them is pushing the boundaries. It's really exciting. This particular DARPA project is fun because they didn't just pick one science-fiction challenge: They decided to let it be DARPA-hard in multiple dimensions."