The NFL is continuing to crowdsource new ways to track head and helmet impacts during games from data scientists, and for the second straight year, the winner of its artificial intelligence competition comes from outside the United States.

The NFL and Amazon Web Services awarded $100,000 in prizes for this year's competition, with the top prize of $50,000 going to Kippei Matsuda of Osaka, Japan, the league announced Friday.

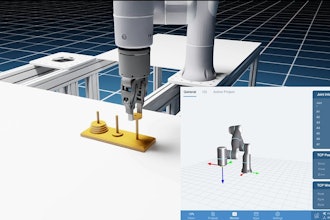

The task for Matsuda and the rest of the data scientists who took part was to use artificial intelligence to create models that would detect helmet impacts from NFL game footage and identify the specific players involved in those impacts.

NFL executive vice president Jeff Miller, who oversees health and safety, said the league started manually tracking helmet impacts for a small number of games a few years ago.

The tedious work of tracking every helmet collision, especially along the line of scrimmage, made it difficult to cover more than a small sample of games as the league tried to gather more data on head impacts.

By sharing game film and information with the data science community, the league hopes to keep developing better systems that can track those impacts more efficiently. The league estimates that Matsuda's winning system can detect and track helmet impacts more accurately than a person working manually, and 83 times faster.

“There were certainly any number of domestic participants too, but the data science community is large and looking for solutions in places or with communities you wouldn’t normally talk to may end up being a pretty fruitful exercise,” Miller said. “So I think we’ve proven that this model of working with the global data science community is helpful to us and will continue to be and we’ll continue to engage in.”

The first year of the competition, in 2020, focused on models that detected all helmet impacts in NFL game footage. That competition, which drew nearly 7,800 submissions from 55 countries, was won by Dmytro Poplavskiy of Brisbane, Australia.

This year's competition focused more on the specific players involved in impacts. It included 825 teams and 1,028 competitors from 65 countries and drew a total of 12,600 submissions.

“This was the most exciting competition I’ve ever experienced,” Matsuda said in a statement. “It’s a very common task for computer vision to detect 2D images, but this challenge required us to consider higher dimensional data such as the 3D location of players on the field. NFL videos are also fun to watch, which is very important since we need to see the data again and again during competition. I would be honored if my AI can help improve the safety of NFL players.”

Miller said the goal of the league is to create a “digital athlete” that can become a virtual representation of the actions, movements and impacts an NFL player experiences on the field during a game and can be used to help predict and hopefully prevent injury in the future.

"That is novel for us and obviously has great importance in how we think about making the game safer for the athletes," Miller said. "It will have an effect on training and coaching, certainly. It will have an effect in rules without a doubt. It will definitely have an impact in terms of equipment, and benefits that we can see from equipment because now for the first time we'll have a pretty good appreciation for every time somebody hits their head during the course of an NFL game, and therefore, we will look for ways to prevent many of those."

Priya Ponnapalli, a senior manager with Amazon's Machine Learning Solutions Lab, said machine learning's ability not only to analyze past data but also to make forward-looking projections will help in creating a digital version of players at every position and in analyzing the types of hits they take.

“Machine learning is a very intuitive process and you get to a certain level of performance, and in this case we’ve got some pretty accurate and comprehensive models,” Ponnapalli said. “And as we collect more data, these models are going to get better and better.”